https://wiki.apache.org/hadoop/Ozone

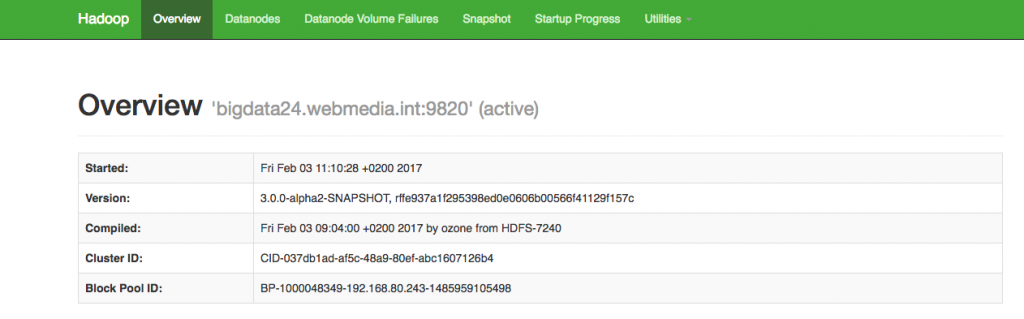

I downloaded the latest Hadoop development source (hadoop-3.0.0-alpha2), switched to the HDFS-7240 branch, where Ozone development is taking place, and built it. Success.
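For reference, those steps can be sketched in shell. This is a minimal sketch under several assumptions: the GitHub mirror of Apache Hadoop, that the HDFS-7240 branch still exists there, and that the prerequisites listed in Hadoop's BUILDING.txt (JDK, Maven, protobuf and so on) are already installed. The helper names `run` and `build_ozone_branch` and the `DRY_RUN` switch are made up for this example.

```bash
# Sketch only: fetch Apache Hadoop, switch to the Ozone feature branch and build it.
# Assumptions: GitHub mirror URL, branch HDFS-7240 still present, BUILDING.txt prerequisites installed.

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper; the checkout directory defaults to ./hadoop.
build_ozone_branch() {
  local dir="${1:-hadoop}"
  run git clone https://github.com/apache/hadoop.git "$dir" || return 1
  run git -C "$dir" checkout HDFS-7240 || return 1
  # -Pdist assembles a runnable layout under hadoop-dist/target; -DskipTests keeps the build short.
  run mvn -f "$dir/pom.xml" package -Pdist -DskipTests -Dtar
}
```

`DRY_RUN=1 build_ozone_branch` only prints the three commands; without it they are executed, and the runnable tree should end up in a directory like hadoop-dist/target/hadoop-3.0.0-alpha2-SNAPSHOT, matching the prompt used below.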

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs
Usage: hdfs [OPTIONS] SUBCOMMAND [SUBCOMMAND OPTIONS]

OPTIONS is none or any of:

--buildpaths                       attempt to add class files from build tree
--config dir                       Hadoop config directory
--daemon (start|status|stop)       operate on a daemon
--debug                            turn on shell script debug mode
--help                             usage information
--hostnames list[,of,host,names]   hosts to use in worker mode
--hosts filename                   list of hosts to use in worker mode
--loglevel level                   set the log4j level for this command
--workers                          turn on worker mode

SUBCOMMAND is one of:

balancer             run a cluster balancing utility
cacheadmin           configure the HDFS cache
classpath            prints the class path needed to get the hadoop jar and the required libraries
crypto               configure HDFS encryption zones
datanode             run a DFS datanode
debug                run a Debug Admin to execute HDFS debug commands
dfsadmin             run a DFS admin client
dfs                  run a filesystem command on the file system
diskbalancer         Distributes data evenly among disks on a given node
envvars              display computed Hadoop environment variables
erasurecode          run a HDFS ErasureCoding CLI
fetchdt              fetch a delegation token from the NameNode
fsck                 run a DFS filesystem checking utility
getconf              get config values from configuration
groups               get the groups which users belong to
haadmin              run a DFS HA admin client
jmxget               get JMX exported values from NameNode or DataNode.
journalnode          run the DFS journalnode
lsSnapshottableDir   list all snapshottable dirs owned by the current user
mover                run a utility to move block replicas across storage types
namenode             run the DFS namenode
nfs3                 run an NFS version 3 gateway
oev                  apply the offline edits viewer to an edits file
oiv                  apply the offline fsimage viewer to an fsimage
oiv_legacy           apply the offline fsimage viewer to a legacy fsimage
oz                   command line interface for ozone
portmap              run a portmap service
scm                  run the Storage Container Manager service
secondarynamenode    run the DFS secondary namenode
snapshotDiff         diff two snapshots of a directory or diff the current directory contents with a snapshot
storagepolicies      list/get/set block storage policies
version              print the version
zkfc                 run the ZK Failover Controller daemon
```

As you can see, the new subcommands oz and scm are there.

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ bin/hdfs oz
ERROR: oz is not COMMAND nor fully qualified CLASSNAME.
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ bin/hdfs scm
Error: Could not find or load main class
```

No luck. I was out of ideas, so I wrote to the Hadoop users list. No answers. After that I tried the Hadoop developers list and got help:

Hi Margus,

It looks like there might have been some error when merging trunk into HDFS-7240, which mistakenly changed some entries in hdfs script. Thanks for the catch!

We will update the branch to fix it. In the meantime, as a quick fix, you can apply the attached patch file and re-compile, OR do the following manually:

1. Open hadoop-hdfs-project/hadoop-hdfs/src/main/bin/hdfs
2. Between

   ```bash
   oiv_legacy)
     HADOOP_CLASSNAME=org.apache.hadoop.hdfs.tools.offlineImageViewer.OfflineImageViewer
   ;;
   ```

   and

   ```bash
   portmap)
     HADOOP_SUBCMD_SUPPORTDAEMONIZATION="true"
     HADOOP_CLASSNAME=org.apache.hadoop.portmap.Portmap
   ;;
   ```

   add

   ```bash
   oz)
     HADOOP_CLASSNAME=org.apache.hadoop.ozone.web.ozShell.Shell
   ;;
   ```

3. Change this line

   ```bash
   CLASS='org.apache.hadoop.ozone.storage.StorageContainerManager'
   ```

   to

   ```bash
   HADOOP_CLASSNAME='org.apache.hadoop.ozone.storage.StorageContainerManager'
   ```

4. Re-compile.
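If you would rather not edit the file by hand, steps 2 and 3 can be scripted. This is a minimal sketch, not the patch that was attached to the mail: the function name `fix_hdfs_script` is hypothetical, and it assumes the layout quoted above (the `oz)` case goes right before `portmap)`, and the scm case contains the literal `CLASS='org.apache.hadoop.ozone.storage.StorageContainerManager'` line). It only uses grep, awk and sed, and running it twice does no harm.

```bash
# Hypothetical helper: apply steps 2 and 3 of the mail to the hdfs launcher script in place.
# Assumes the script layout quoted above; this is not the patch attached to the mail.
fix_hdfs_script() {
  local script="$1" tmp
  tmp="$(mktemp)"

  # Step 2: add an oz) case right before the portmap) case, unless one already exists.
  if ! grep -q '^[[:space:]]*oz)' "$script"; then
    awk '
      /^[ \t]*portmap\)/ && !added {
        match($0, /^[ \t]*/)             # reuse the indentation of the portmap) line
        indent = substr($0, 1, RLENGTH)
        print indent "oz)"
        print indent "  HADOOP_CLASSNAME=org.apache.hadoop.ozone.web.ozShell.Shell"
        print indent ";;"
        added = 1
      }
      { print }
    ' "$script" > "$tmp" && cat "$tmp" > "$script"
  fi

  # Step 3: the scm case has to set HADOOP_CLASSNAME instead of CLASS.
  sed "s/^\([[:space:]]*\)CLASS='\(org\.apache\.hadoop\.ozone\.storage\.StorageContainerManager\)'/\1HADOOP_CLASSNAME='\2'/" \
    "$script" > "$tmp" && cat "$tmp" > "$script"

  rm -f "$tmp"
}
```

Usage: `fix_hdfs_script hadoop-hdfs-project/hadoop-hdfs/src/main/bin/hdfs`, then re-compile as in step 4. Writing through `cat` instead of `mv` keeps the script's executable bit.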

I rebuilt it, and that fixed it.

Let's play with the new toy.

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs oz -v -createVolume http://127.0.0.1:9864/margusja -user ozone -quota 10GB -root
Volume name : margusja
{
  "owner" : {
    "name" : "ozone"
  },
  "quota" : {
    "unit" : "GB",
    "size" : 10
  },
  "volumeName" : "margusja",
  "createdOn" : "Fri, 03 Feb 2017 10:13:39 GMT",
  "createdBy" : "hdfs"
}
```

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs oz -createBucket http://127.0.0.1:9864/margusja/demo -user ozone -v
Volume Name : margusja
Bucket Name : demo
{
  "volumeName" : "margusja",
  "bucketName" : "demo",
  "acls" : null,
  "versioning" : "DISABLED",
  "storageType" : "DISK"
}
```

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs oz -v -putKey http://127.0.0.1:9864/margusja/demo/key001 -file margusja.txt
Volume Name : margusja
Bucket Name : demo
Key Name : key001
File Hash : 4273b3664fcf8bd89fd2b6d25cdf64ae
```

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs oz -v -putKey http://127.0.0.1:9864/margusja/demo/key002 -file margusja2.txt
Volume Name : margusja
Bucket Name : demo
Key Name : key002
```
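The File Hash printed by -putKey is a 32-hex-digit value, which looks like an MD5 digest of the uploaded file. Assuming that is what it is, a local copy can be checked against it with a sketch like this; `md5_matches` is a hypothetical helper and GNU coreutils `md5sum` is assumed to be available.

```bash
# Hypothetical check: does a local file's MD5 digest equal the hash reported by oz?
# Assumes the reported "File Hash" / "md5hash" is a plain MD5 of the file content.
md5_matches() {
  local file="$1" expected="$2" actual
  # md5sum prints "<hash>  <filename>"; keep only the hash.
  actual="$(md5sum "$file" | cut -d' ' -f1)"
  [ "$actual" = "$expected" ]
}
```

For example: `md5_matches margusja.txt 4273b3664fcf8bd89fd2b6d25cdf64ae && echo "local copy matches"`.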

```
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$ ./bin/hdfs oz -v -listKey http://127.0.0.1:9864/margusja/demo/
Volume Name : margusja
bucket Name : demo
{
  "version" : 0,
  "md5hash" : "4273b3664fcf8bd89fd2b6d25cdf64ae",
  "createdOn" : "Fri, 03 Feb 2017 12:25:43 +0200",
  "size" : 21,
  "keyName" : "key001"
}
{
  "version" : 0,
  "md5hash" : "4273b3664fcf8bd89fd2b6d25cdf64ae",
  "createdOn" : "Fri, 03 Feb 2017 12:26:14 +0200",
  "size" : 21,
  "keyName" : "key002"
}
[ozone@bigdata24 hadoop-3.0.0-alpha2-SNAPSHOT]$
```
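The listing is a stream of pretty-printed JSON objects, one per key. To get a compact one-line-per-key summary without extra tools, a small awk filter is enough. This is a sketch that assumes the exact layout shown above (one `"field" : value` pair per line, each object closed by a line holding only `}`); `summarize_keys` is a hypothetical name, and `jq` would be a sturdier choice if it is installed.

```bash
# Hypothetical helper: turn `oz -listKey` output into "keyName size md5hash" lines.
# Assumes the pretty-printed layout shown above; header lines such as "Volume Name : ..." are ignored.
summarize_keys() {
  awk -F' : ' '
    /"keyName"/ { name = $2 }
    /"size"/    { size = $2 }
    /"md5hash"/ { hash = $2 }
    /^[ \t]*[}][ \t]*$/ {
      # strip JSON quotes and trailing commas, then emit one line per key object
      gsub(/[",]/, "", name); gsub(/[",]/, "", size); gsub(/[",]/, "", hash)
      if (name != "") print name, size, hash
      name = size = hash = ""
    }
  '
}
```

Usage: `./bin/hdfs oz -listKey http://127.0.0.1:9864/margusja/demo/ | summarize_keys`. For the listing above this yields two lines with the same size (21) and md5hash, which suggests margusja.txt and margusja2.txt have identical content.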

In filesystem terms, we created the directory /margusja, then the subdirectory /margusja/demo, and finally added two files to /margusja/demo/.

So the picture is something like this:

```
/margusja                      (volume)
/margusja/demo                 (bucket)
/margusja/demo/margusja.txt    (key001)
/margusja/demo/margusja2.txt   (key002)
```
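The same hierarchy shows up in the URLs given to oz: after host:port, the first path segment is the volume, the second is the bucket, and whatever follows is the key, just as the CLI echoes it in its "Volume Name / Bucket Name / Key Name" lines. A small hypothetical helper (not part of Ozone) makes that mapping explicit; it is a sketch using only bash parameter expansion.

```bash
# Hypothetical helper: split an oz URL http://host:port/volume[/bucket[/key]]
# into its volume, bucket and key parts (empty when a level is absent).
ozone_path_parts() {
  local rest="${1#*://}"               # drop the scheme, e.g. "http://"
  rest="${rest#*/}"                    # drop host:port
  local volume="${rest%%/*}"
  rest="${rest#"$volume"}"; rest="${rest#/}"
  local bucket="${rest%%/*}"
  rest="${rest#"$bucket"}"; rest="${rest#/}"
  local key="$rest"                    # everything left is the key name
  printf 'volume=%s bucket=%s key=%s\n' "$volume" "$bucket" "$key"
}
```

For instance, `ozone_path_parts http://127.0.0.1:9864/margusja/demo/key001` prints `volume=margusja bucket=demo key=key001`, while the volume-only URL used with -createVolume leaves bucket and key empty.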