- Start a new named session – screen -S name

- List existing sessions – screen -ls

- Kill the current window – CTRL+a+k

- Detach from the current session (it keeps running in the background) – CTRL+a+d

- Show the list of existing windows in the bar at the bottom of the window – CTRL+a+w

- Show a selectable list of existing windows – CTRL+a+"

- Name the active window – CTRL+a+A

- Show info about the active window – CTRL+a+i

Margusja is testing

test content

--

Regards, Margus (Margusja) Roo

+372 51 48 780

http://margus.roo.ee

msn: margusja@kodila.ee

skype: margusja

Iphone-Android-Blackberry

A simple python webserver

[Thu Nov 04 22:24:04 margusja@IRack sudo python -m SimpleHTTPServer 80

Password:

Serving HTTP on 0.0.0.0 port 80 ...

192.168.1.67 - - [04/Nov/2010 22:26:50] "GET / HTTP/1.1" 200 -

192.168.1.67 - - [04/Nov/2010 22:26:51] code 404, message File not found

192.168.1.67 - - [04/Nov/2010 22:26:51] "GET /favicon.ico HTTP/1.1" 404 -

192.168.1.67 - - [04/Nov/2010 22:27:06] "GET /Pictures/ HTTP/1.1" 200 -

192.168.1.67 - - [04/Nov/2010 22:27:09] "GET /Pictures/1hacker.jpg HTTP/1.1" 200 -

192.168.1.67 - - [04/Nov/2010 22:28:13] "GET /Pictures/icon_defaultUser.png HTTP/1.1" 200 -

192.168.1.67 - - [04/Nov/2010 22:28:18] "GET /Pictures/IMG_0001.MOV HTTP/1.1" 200 -
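`SimpleHTTPServer` is the Python 2 module name. In Python 3 the same handler lives in `http.server`, so the one-liner becomes `python3 -m http.server 80`. Below is a minimal sketch of doing the same thing from code, assuming Python 3.7 or newer. The helper name `serve_directory` and the default port 8000 are illustrative choices and do not come from the session above.

```python
import functools
import http.server
import threading


def serve_directory(directory=".", host="0.0.0.0", port=8000):
    """Start a static file server for `directory` in a background thread.

    Returns the server object; call .shutdown() on it to stop serving.
    Passing port=0 lets the OS pick a free port (see server.server_address).
    """
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer((host, port), handler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return server
```

Ports below 1024, such as 80, still need root privileges, which is why the original command is run with `sudo`.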

Postgres pgpool-II

I was tinkering here with mirroring two PostgreSQL databases.

The setup itself is simple: machine A (master DB and pgpool-II) and machine B (slave).

# Replication mode

replication_mode = true

# Load balancing mode, i.e., all SELECTs are load balanced.

# This is ignored if replication_mode is false.

load_balance_mode = false

# If true, operate in master/slave mode.

master_slave_mode = false

# backend_hostname, backend_port, backend_weight

# here are examples

backend_hostname0 = 'master'

backend_port0 = 5432

backend_weight0 = 1

backend_data_directory0 = '/var/lib/pgsql/data'

backend_hostname1 = 'slave'

backend_port1 = 5432

backend_weight1 = 1

backend_data_directory1 = '/var/lib/pgsql/data'

I struggled for several hours and the pgpool log still showed:

ERROR: pid 23466: pool_read_int: data does not match between between master(0) slot[1] (50331648)

ERROR: pid 23466: pool_do_auth: read auth kind failed
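One possible reading of the number in that log line, offered as an interpretation and not something the pgpool documentation states: 50331648 is 0x03000000, which is what the 4-byte big-endian value 3 looks like when read as a little-endian integer. In the PostgreSQL wire protocol an authentication request code of 3 asks for a clear text password, which would fit the `pg_hba.conf` fix described below. A small sketch of the arithmetic, where the helper name `swap32` is made up for illustration:

```python
import struct

# Authentication request codes from the PostgreSQL frontend/backend protocol.
AUTH_KINDS = {
    0: "ok (no password requested, e.g. trust)",
    3: "clear text password",
    5: "md5 password",
}


def swap32(value):
    """Reinterpret a 32-bit unsigned integer with its byte order reversed."""
    return struct.unpack("<I", struct.pack(">I", value))[0]


logged = 50331648            # value printed in the pgpool error
auth_kind = swap32(logged)   # 0x03000000 becomes 0x00000003
print(hex(logged), auth_kind, AUTH_KINDS.get(auth_kind, "unknown"))
```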

The solution lay in the slave's pg_hba.conf: I simply set trust for the required IP.

Reportedly pgpool does not support clear text authentication.

In the replication mode or master/slave mode, trust, clear text password, pam methods are supported. clear text password is not supported. md5 is supported by using "pool_passwd". Here are steps to enable md5 authentication:

Login as DB user and type "pg_md5 --md5auth " user name and md5 encrypted password is registered into pool_passwd. If pool_passwd does not exist yet, pg_md5 command will automatically create it for you.

The format of pool_passwd is "username:encrypted_passwd".

You need to also add appropriate md5 entry to pool_hba.conf. See Setting up pool_hba.conf for client authentication (HBA) for more details.

Please note that the user name and password must be identical to those registered in PostgreSQL.
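For reference, PostgreSQL's md5 password format is the string `md5` followed by the MD5 hex digest of the password concatenated with the user name. The sketch below builds a line in the `username:encrypted_passwd` format quoted above. Treat it as an illustration of the format only: it assumes `pool_passwd` stores the same `md5...` string that PostgreSQL does, and on a real system `pg_md5` itself is the tool to use. The function names and the sample credentials are hypothetical.

```python
import hashlib


def pg_md5_hash(username, password):
    """Return PostgreSQL's md5 password string: 'md5' + md5(password + username)."""
    digest = hashlib.md5((password + username).encode("utf-8")).hexdigest()
    return "md5" + digest


def pool_passwd_line(username, password):
    """Format one entry as 'username:encrypted_passwd'."""
    return "%s:%s" % (username, pg_md5_hash(username, password))


# Hypothetical credentials, for illustration only.
print(pool_passwd_line("dbuser", "secret"))
```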

In all the other modes, trust, clear text password, crypt, md5, pam methods are supported.

pgpool-II does not support pg_hba.conf-like access controls. If the TCP/IP connection is enabled, pgpool-II accepts all the connections from any host. If needed, use iptables and such to control access from other hosts. (PostgreSQL server accepting pgpool-II connections can use pg_hba.conf, of course).

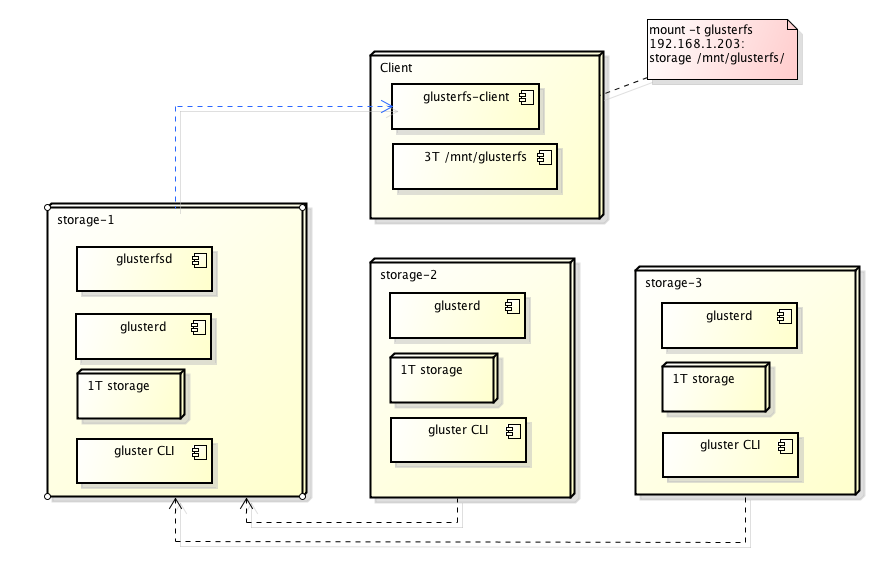

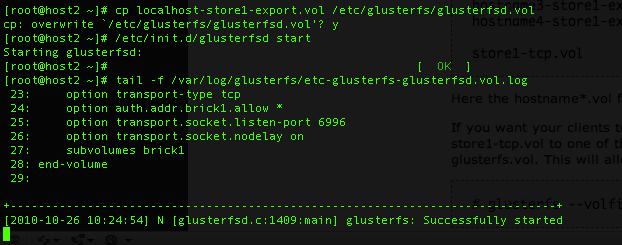

Glusterfs

It was about time. Who keeps storage on a single server these days anyway? In any case, I am so proud of launching my first glusterfs instance that I am putting it up here. Who knows, maybe I will add a small how-to for myself later.

The glusterfs server must be running on the storage1 machine:

[root@storage1 ~]# /etc/init.d/glusterfsd status

glusterfsd (pid 23029 4386) is running...

[root@storage1 ~]#

First we create a volume made up of two storage bricks (this can be done on any machine where glusterd is running and the gluster CLI is available):

[root@storage2 ~]# gluster volume create storage transport tcp 192.168.1.202:/mnt/storage 192.168.1.203:/mnt/storage

Creation of volume storage has been successful

[root@storage2 ~]# gluster volume info storage

Volume Name: storage

Type: Distribute

Status: Created

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: 192.168.1.202:/mnt/storage

Brick2: 192.168.1.203:/mnt/storage

[root@storage2 ~]#
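If the `gluster volume info` output needs to be checked from a script, its `Key: value` layout is easy to parse. The sketch below assumes exactly the layout shown in the session above (newer gluster releases may print additional fields), and the function name `parse_volume_info` is made up for illustration.

```python
def parse_volume_info(text):
    """Parse `gluster volume info <name>` output into a dict.

    Brick lines are collected into a list under the 'bricks' key;
    every other 'Key: value' line becomes a dict entry.
    """
    info = {"bricks": []}
    for line in text.splitlines():
        line = line.strip()
        if ":" not in line:
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key.startswith("Brick") and key[5:].isdigit():
            info["bricks"].append(value)  # e.g. '192.168.1.202:/mnt/storage'
        elif value:                       # skips the bare 'Bricks:' header
            info[key] = value
    return info


sample = """\
Volume Name: storage
Type: Distribute
Status: Created
Number of Bricks: 2
Transport-type: tcp
Bricks:
Brick1: 192.168.1.202:/mnt/storage
Brick2: 192.168.1.203:/mnt/storage
"""

info = parse_volume_info(sample)
print(info["Volume Name"], info["Status"], info["bricks"])
```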

Start it:

[root@storage2 ~]# gluster volume start storage

Starting volume storage has been successful

On the client machine we mount glusterfs.

[root@client ~]# mount

/dev/xvda2 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/xvda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

[root@client ~]# mount -t glusterfs 192.168.1.203:storage /mnt/glusterfs/

[root@client ~]# mount

/dev/xvda2 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/xvda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

glusterfs#192.168.1.203:storage on /mnt/glusterfs type fuse (rw,allow_other,default_permissions,max_read=131072)

[root@client ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda2 1.9G 1.3G 524M 71% /

/dev/xvda1 99M 13M 81M 14% /boot

tmpfs 250M 0 250M 0% /dev/shm

glusterfs#192.168.1.203:storage

942M 21M 873M 3% /mnt/glusterfs

Add a new node:

[root@storage2 ~]# gluster volume add-brick storage 192.168.1.201:/mnt/storage

Add Brick successful

[root@storage2 ~]# gluster volume info storage

Volume Name: storage

Type: Distribute

Status: Started

Number of Bricks: 3

Transport-type: tcp

Bricks:

Brick1: 192.168.1.202:/mnt/storage

Brick2: 192.168.1.203:/mnt/storage

Brick3: 192.168.1.201:/mnt/storage

On the client machine, after some time has passed:

[root@client ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda2 1.9G 1.3G 524M 71% /

/dev/xvda1 99M 13M 81M 14% /boot

tmpfs 250M 0 250M 0% /dev/shm

glusterfs#192.168.1.203:storage

1.2G 25M 1.1G 3% /mnt/glusterfs

Required packages from the repo:

[root@host3 ~]# yum install libibverbs-devel fuse-devel fuse

PHP and controlling how long a socket stays open (curl)

As is well known, PHP's execution time limit cannot control how much time is spent inside an open socket.

A good way to keep that time under control as well is to use PHP's cURL functionality. I will not write up the various options at length here; for a closer look there is always the PHP manual. This is more of a cheat sheet for myself.

For testing I created a 1G file:

dd if=/dev/zero of=rand.txt bs=1M count=1024

php script

<?php
// Margusja's curl testscript

// create a new cURL resource
$ch = curl_init();
curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, 1); // maximum time in seconds spent trying to connect to the host
curl_setopt($ch, CURLOPT_TIMEOUT, 10); // maximum time in seconds the whole curl operation may run

// set URL and other appropriate options
curl_setopt($ch, CURLOPT_URL, "http://ftp.margusja.pri.ee/rand.txt");
curl_setopt($ch, CURLOPT_HEADER, false);

// grab URL and pass it to the browser
curl_exec($ch);

// close cURL resource, and free up system resources
curl_close($ch);

And the results with different values for the CURLOPT_TIMEOUT option:

CURLOPT_TIMEOUT = 5;

[08:47:01 margusja@arendus arendus]$ time php ./curltest.php

real 0m5.063s

user 0m0.041s

sys 0m0.024s

CURLOPT_TIMEOUT = 10;

[08:47:08 margusja@arendus arendus]$ time php ./curltest.php

real 0m10.062s

user 0m0.053s

sys 0m0.096s
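The same idea, one limit for the socket and one for the transfer as a whole, can be sketched outside PHP as well. The Python version below is only a rough analogue and not a port of the script above: `urllib`'s `timeout` applies to the connect and to each blocking read, not to the connect alone, and the overall deadline is checked between reads, so it can overrun by up to one socket timeout. The names `fetch_with_deadline` and `DeadlineExceeded` are made up for illustration.

```python
import time
import urllib.request


class DeadlineExceeded(Exception):
    """Raised when the whole transfer takes longer than allowed."""


def fetch_with_deadline(url, socket_timeout=1.0, total_timeout=10.0,
                        chunk_size=64 * 1024):
    """Download `url`, discard the body, and return the number of bytes read.

    socket_timeout bounds the connect and each blocking read;
    total_timeout bounds the transfer as a whole, checked between reads.
    """
    deadline = time.monotonic() + total_timeout
    received = 0
    with urllib.request.urlopen(url, timeout=socket_timeout) as response:
        while True:
            if time.monotonic() >= deadline:
                raise DeadlineExceeded("gave up after %d bytes" % received)
            # read1() performs at most one underlying socket read per call
            chunk = response.read1(chunk_size)
            if not chunk:
                return received
            received += len(chunk)
```

Calling `fetch_with_deadline("http://ftp.margusja.pri.ee/rand.txt", total_timeout=5)` would correspond roughly to the CURLOPT_TIMEOUT = 5 run above.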

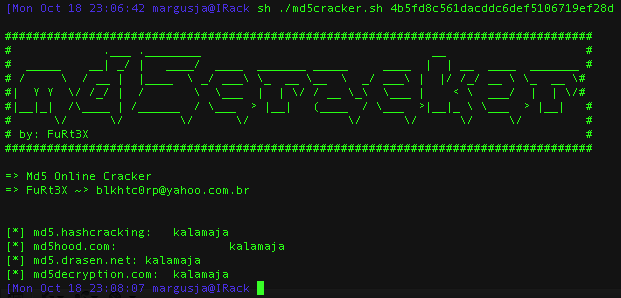

MD5 decrypt

DNS tool DIG hints

I have used the tool called dig for years, yet the AXFR query was either unknown to me or had somehow slipped past me. Downright embarrassing.

In any case, I am noting it down here for myself; maybe something else interesting about dig will turn up later.

AXFR – returns the full record set for the queried domain, provided zone transfers are allowed (allow-transfer { }; in the options block)

[Fri Oct 15 19:54:42 margusja@IRack dig AXFR roo.ee @ns2.okia.ee

; <<>> DiG 9.6.0-APPLE-P2 <<>> AXFR roo.ee @ns2.okia.ee

;; global options: +cmd

roo.ee. 14400 IN SOA ns3.okia.ee. hostmaster.roo.ee. 2010072003 14400 3600 1209600 86400

roo.ee. 14400 IN NS ns2.okia.ee.

roo.ee. 14400 IN NS ns3.okia.ee.

roo.ee. 14400 IN A 90.190.106.29

roo.ee. 14400 IN MX 10 mail.roo.ee.

roo.ee. 14400 IN TXT "v=spf1 a mx ip4:90.190.106.29 ~all"

ftp.roo.ee. 14400 IN A 90.190.106.29

localhost.roo.ee. 14400 IN A 127.0.0.1

mail.roo.ee. 14400 IN A 90.190.106.29

margus.roo.ee. 14400 IN CNAME roo.ee.

pop.roo.ee. 14400 IN A 90.190.106.29

smtp.roo.ee. 14400 IN A 90.190.106.29

www.roo.ee. 14400 IN A 90.190.106.29

roo.ee. 14400 IN SOA ns3.okia.ee. hostmaster.roo.ee. 2010072003 14400 3600 1209600 86400

;; Query time: 173 msec

;; SERVER: 67.207.134.150#53(67.207.134.150)

;; WHEN: Fri Oct 15 19:57:38 2010

;; XFR size: 14 records (messages 1, bytes 376)

[Fri Oct 15 19:57:38 margusja@IRack

PTR query, i.e. a reverse lookup. Also a handy way to see the ISP.

[Fri Oct 15 21:12:59 margusja@IRack dig 23.106.190.90.in-addr.arpa PTR

; <<>> DiG 9.6.0-APPLE-P2 <<>> 23.106.190.90.in-addr.arpa PTR

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 12135

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 2, ADDITIONAL: 3

;; QUESTION SECTION:

;23.106.190.90.in-addr.arpa. IN PTR

;; ANSWER SECTION:

23.106.190.90.in-addr.arpa. 10800 IN PTR 90-190-106-23-dbweb.ee.

;; AUTHORITY SECTION:

106.190.90.in-addr.arpa. 3497 IN NS ns2.elion.ee.

106.190.90.in-addr.arpa. 3497 IN NS ns.elion.ee.

;; ADDITIONAL SECTION:

ns.elion.ee. 4864 IN A 213.168.18.146

ns.elion.ee. 9032 IN AAAA 2001:7d0::7:215:60ff:fe0c:d578

ns2.elion.ee. 4272 IN A 195.50.193.163

;; Query time: 28 msec

;; SERVER: 192.168.1.254#53(192.168.1.254)

;; WHEN: Fri Oct 15 21:13:19 2010

;; MSG SIZE rcvd: 181

[Fri Oct 15 21:13:19 margusja@IRack

Or

[Fri Oct 15 21:13:19 margusja@IRack dig -x 90.190.106.23

; <<>> DiG 9.6.0-APPLE-P2 <<>> -x 90.190.106.23

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 3581

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 2, ADDITIONAL: 3

;; QUESTION SECTION:

;23.106.190.90.in-addr.arpa. IN PTR

;; ANSWER SECTION:

23.106.190.90.in-addr.arpa. 10800 IN PTR 90-190-106-23-dbweb.ee.

;; AUTHORITY SECTION:

106.190.90.in-addr.arpa. 10386 IN NS ns.elion.ee.

106.190.90.in-addr.arpa. 10386 IN NS ns2.elion.ee.

;; ADDITIONAL SECTION:

ns.elion.ee. 4544 IN A 213.168.18.146

ns.elion.ee. 8686 IN AAAA 2001:7d0::7:215:60ff:fe0c:d578

ns2.elion.ee. 3929 IN A 195.50.193.163

;; Query time: 30 msec

;; SERVER: 192.168.1.254#53(192.168.1.254)

;; WHEN: Fri Oct 15 21:15:55 2010

;; MSG SIZE rcvd: 181

[Fri Oct 15 21:15:55 margusja@IRack
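The two dig invocations above are equivalent because `dig -x` simply reverses the octets and appends `in-addr.arpa` before asking for the PTR record. A minimal sketch of that name construction using Python's standard `ipaddress` module; the helper name `ptr_name` is made up for illustration, and no DNS query is performed:

```python
import ipaddress


def ptr_name(ip):
    """Return the reverse-lookup name that `dig -x <ip>` queries for."""
    return ipaddress.ip_address(ip).reverse_pointer


print(ptr_name("90.190.106.23"))  # 23.106.190.90.in-addr.arpa
```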

Finally found a command line FTP client – lftp

For years I used a tool called ncftp as my command line FTP client, along with all the troubles that came with it. Eventually I had had enough and dove into the depths of the internet in search of a better tool that meets my needs.

It turned out the solution had been sitting, most of the time, on every server I had access to: lftp.

So here, in a few lines for my own reference, is a sample session.

Download:

ibrahim@anfield:~$ mkdir dnld ; cd dnld

ibrahim@anfield:~/dnld$ lftp ftp://ftpsite.com/

set ftp:ssl-force true # In case FTP over SSL/TLS (FTPS) is required.

lftp ftpsite.com:~> user username

Password:

lftp ftpsite.com:~> cd /directory/to/download/

lftp ftpsite.com:~/directory/to/download/> mirror .

Upload:

ibrahim@anfield:~$ cd upld

ibrahim@anfield:~/upld$ lftp ftp://ftpsite.com/

lftp ftpsite.com:~> user username

Password:

lftp ftpsite.com:~> cd /directory/to/upload/

lftp ftpsite.com:~/directory/to/upload/> mirror -R .

Use ssl

[09:47:17 margusja@arendus cms]$ lftp

lftp :~> set ftp:ssl-force true

lftp :~> connect server.domain.ee

lftp server.domain.ee:~> user username

Password: