Computer science at school: virtual reality

Computer science at school: IoT

IoT (Internet of Things). Here, "internet" does not have to mean the internet we usually have in mind. It is still an Internet of Things when a sensor and an actuator are connected to each other while the rest of the world knows nothing about it. Often, though, IoT devices are connected to the wider world as well. On the one hand, it is easy to get started. Today the market offers many affordable sensors that measure environmental parameters such as temperature. There are also actuators that physically do something in the environment, for example a motor that moves a window.

So far, so simple. Things can get complicated when you mix sensors and actuators from different manufacturers. You also need software between the sensors and the actuators that collects the sensor data and, based on a set of rules, tells the actuators what to do. For example: if the temperature is above 25 degrees, open the window.
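The rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a real IoT setup: the sensor readings are simulated, the `Window` class is a hypothetical stand-in for an actuator, and closing the window at or below the threshold is an added assumption (the text only describes opening it).

```python
from dataclasses import dataclass

# Threshold from the text: open the window above 25 °C.
OPEN_THRESHOLD_C = 25.0


@dataclass
class Window:
    """Hypothetical stand-in for an actuator, e.g. a motor that moves a window."""
    is_open: bool = False

    def open(self) -> None:
        self.is_open = True

    def close(self) -> None:
        self.is_open = False


def apply_rule(temperature_c: float, window: Window) -> None:
    """Open the window when it is warmer than the threshold.

    Closing it otherwise is an assumption added for this sketch.
    """
    if temperature_c > OPEN_THRESHOLD_C:
        window.open()
    else:
        window.close()


if __name__ == "__main__":
    window = Window()
    # Simulated sensor readings in degrees Celsius.
    for reading in [22.5, 24.9, 26.1, 23.0]:
        apply_rule(reading, window)
        state = "open" if window.is_open else "closed"
        print(f"{reading:5.1f} °C -> window {state}")
```

In a real installation the readings would come from a sensor and `open()`/`close()` would drive a motor; keeping the rule in its own function makes it easy to swap either side, which matters when mixing devices from different manufacturers.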

IoT certainly has its place at school. For example, it can make teaching programming more interesting. It also suits advanced robotics, where robots communicate with each other and one robot acts on data from another.

Computer museum vol 2

I took a virtual walk through The National Museum of Computing. The experience was so-so, average at best. Apparently the museum had been scanned with some technology and converted into 3D. The user experience was nothing special. Still, finding a few familiar computers brought some joy.

If I were to make suggestions for creating a virtual computer museum, or a virtual museum in general, I would expect a more genuinely virtual experience. For example, virtual reality hardware could make the experience as immediate as possible. Real museums usually do not let you pick up the exhibits, so this is where virtual reality could go a step further and let visitors pick up the computers and examine them closely. A virtual environment can probably also convey far more information than a physical museum. In the physical world, the floor space available for displaying computers is a limitation in itself, as is currently the case with the Tartu computer museum.

What I value about a physical museum is that you can go there with friends. It is also a chance to meet new people. That is probably impossible to replace fully with virtual reality.

Computer museum part 1

It was 1989 when I sold my Java 250 and my jeans so that a friend and I could buy a computer together. We put the money in an envelope and sent it off into the vastness of Russia. A couple of months later, a Russian-made Krista-2 computer arrived at the post office. That was the beginning.

The first foreign computer I came across was the Sinclair ZX Spectrum, which you can also see at the computer museum in Tartu.

Image from Wikipedia

Today it may seem strange that programs had to be loaded into the computer from a cassette recorder that was bigger than the computer itself.

Image from Google

The tape recorder alone was not enough; something also had to display a picture. Back then we did not know separate monitors as such. You had to get acquainted with the back of the family TV and solder a video input onto it yourself.

Finished programs also had to be saved to cassette. You can only imagine what making backup copies was like. Finding programs on the cassette was another headache. The recorder in the picture had a tape counter, and we wrote the counter position where each program started on a piece of paper.

Coming back to the ZX Spectrum: compared with the Krista, it was a huge step forward for us. There were even books about the BASIC programming language. It is worth stressing that we did not have an internet connection back then.

This particular machine was not the last ZX I came across. Later came one with a floppy disk drive.

Content creation

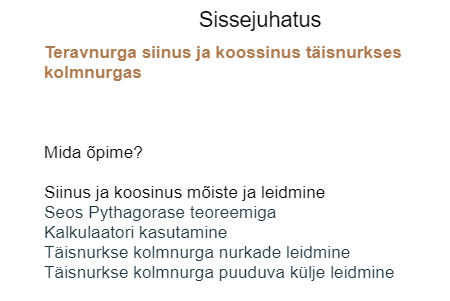

I am a huge fan of the Desmos environment, so I chose it to demonstrate my content creation.

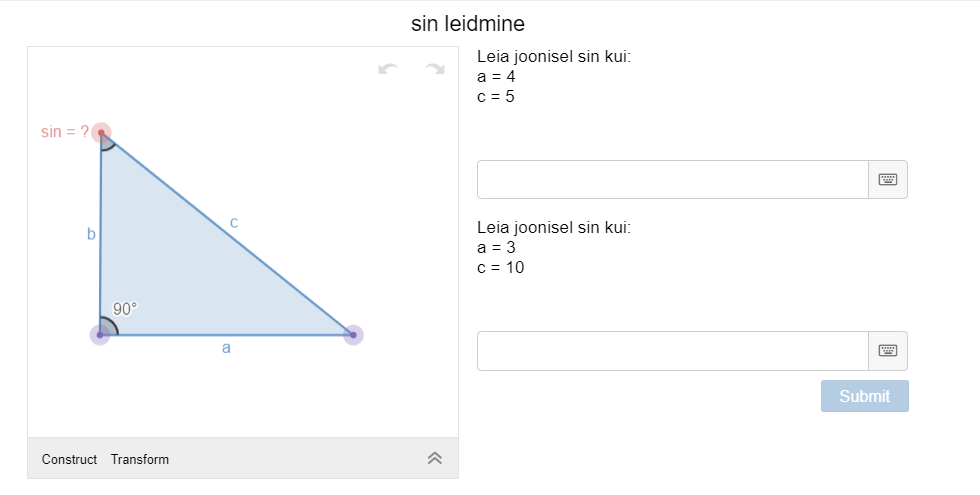

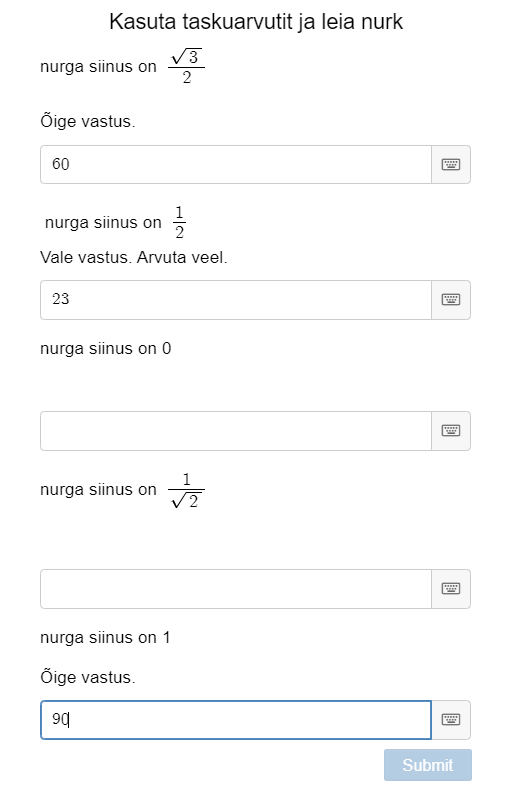

I created material that helps explain the concepts of the sine and cosine of an acute angle.
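As a compact reference for the concepts the material covers, here are the standard right-triangle definitions. The symbols are my own labels, not taken from the Desmos activity: $\alpha$ is the acute angle, $a$ the leg opposite it, $b$ the leg adjacent to it, and $c$ the hypotenuse. The last identity follows from the Pythagorean theorem $a^2 + b^2 = c^2$.

$$
\sin\alpha = \frac{a}{c}, \qquad \cos\alpha = \frac{b}{c}, \qquad \sin^2\alpha + \cos^2\alpha = \frac{a^2 + b^2}{c^2} = 1
$$

For example, in a right triangle with legs 3 and 4 and hypotenuse 5, the angle opposite the leg of length 3 has $\sin\alpha = 3/5 = 0.6$ and $\cos\alpha = 4/5 = 0.8$, and indeed $0.36 + 0.64 = 1$.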

As an introduction, we present what lies ahead.

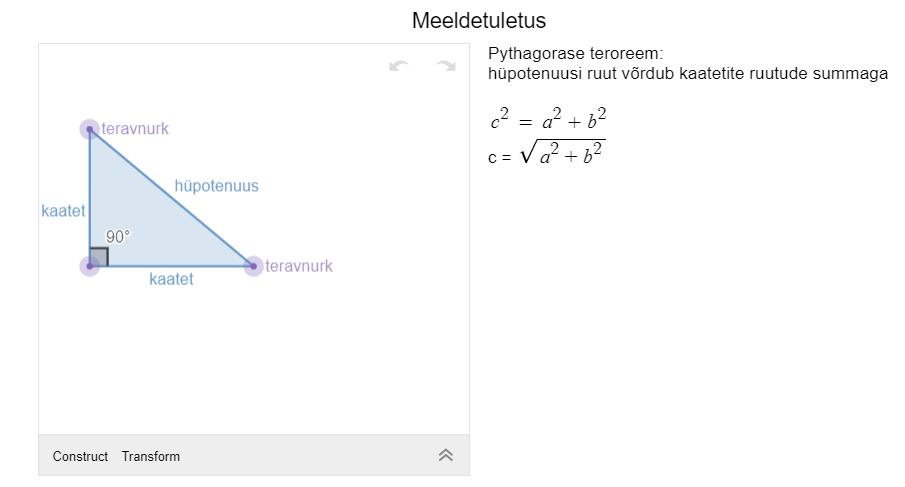

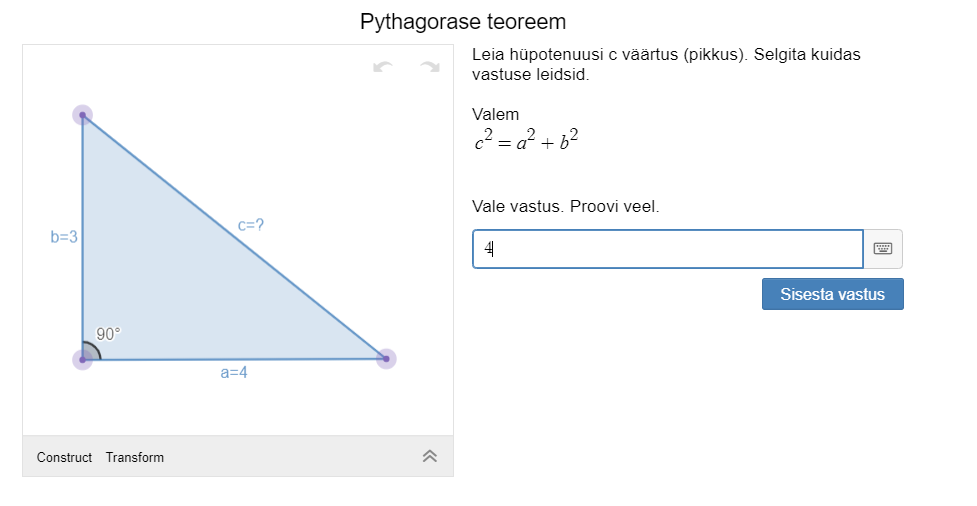

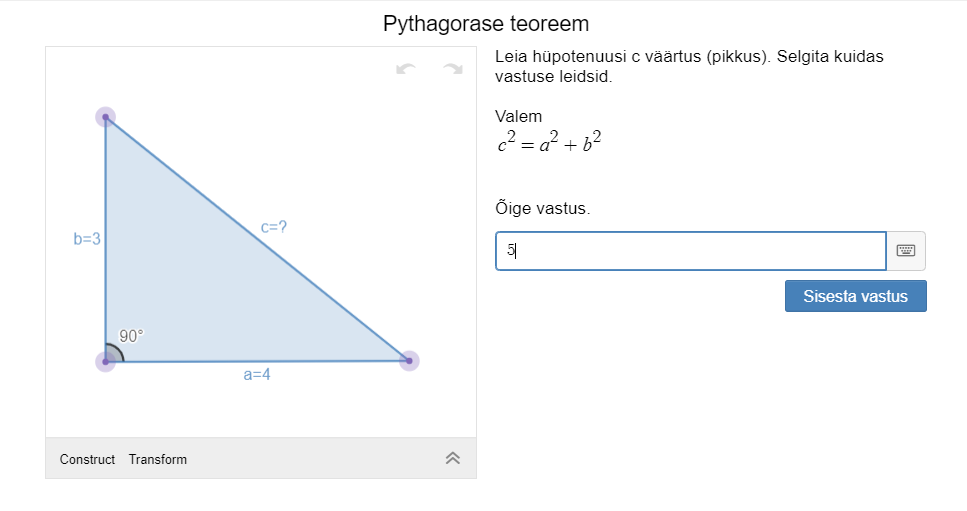

We recall previously learned material that is important here.

We immediately apply what we recalled and give instant feedback.

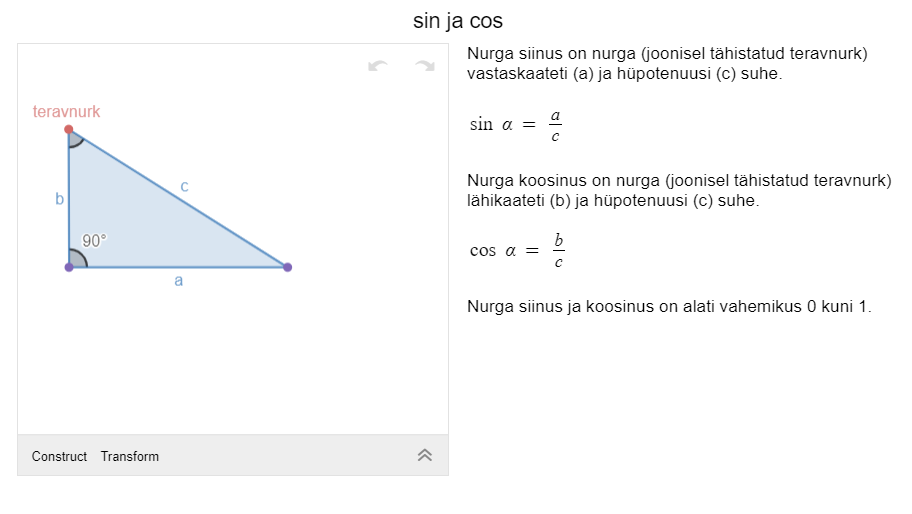

We explain the new information and give examples.

Once the new information has been learned, we apply it, again with immediate feedback.

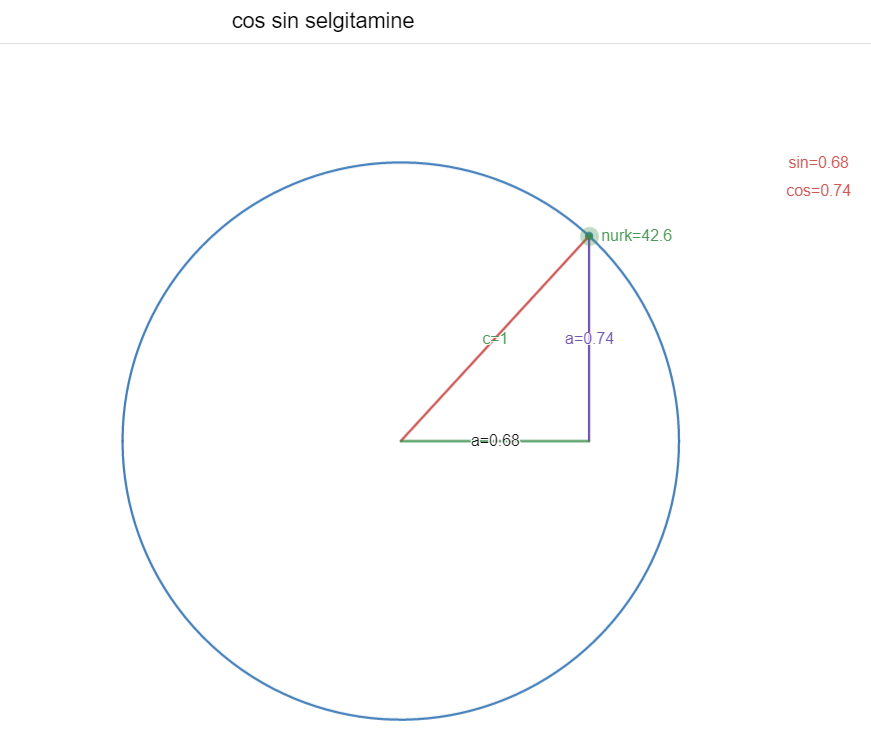

Once the first part of the material has been mastered, we move on, again explaining and giving examples.

Right away we try to apply what we have learned, with immediate feedback.

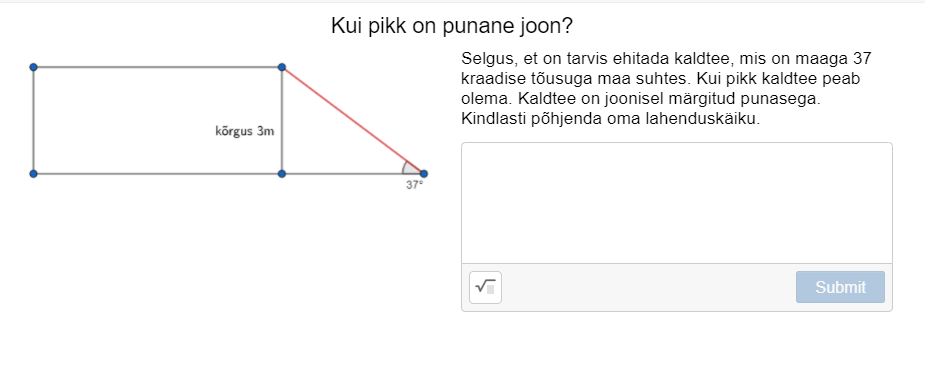

We also add a real-life problem.

What I like most about Desmos is the ability to follow students' progress in near real time: you can capture their answers and respond to them interactively.

Augmented reality

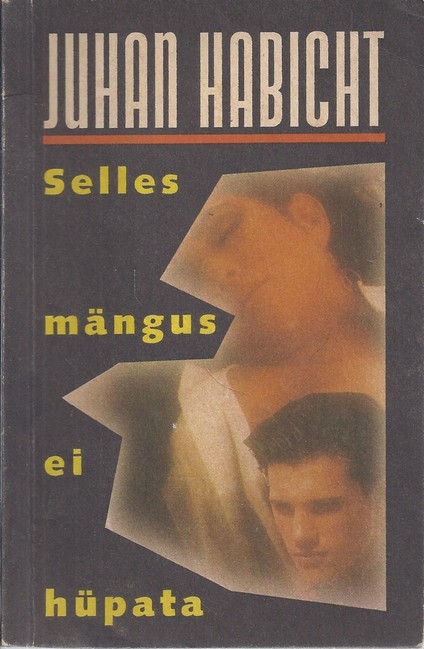

I would not dare say whether this book has influenced me the most, but it has certainly had a strong impact on my later life. I can say fairly confidently that this book led me to IT.

AR image

In my view, augmented reality has a very strong place at school. It is hard to find a subject where AR could not be used.

Teachers can use it when preparing their materials, and students when presenting their projects.

Using AR at school adds a further dimension to how teachers and students use IT tools. AR also opens up countless new ways of teaching and learning.

It is hard to find anything that AR takes away. If AR tools are used in moderation, traditional tools such as pen and paper keep their place as well.

Questionnaire

I chose Google Forms to build my questionnaire. Google Forms is widely used and offers excellent options for building questionnaires and later analysing the responses.

The questionnaire itself can be found here.

I find the environment flexible enough for building IT-related questionnaires for students. There are several ways to give feedback: text, images, videos and links.

You can also set up grading (points).

I did not try any add-ons. Building my questionnaire went smoothly, and I expect to keep using the environment.

3D

I was pleasantly surprised to learn that 3DC.io was made by Estonians.

For my first attempt I chose a house. The more I worked on it, the worse it got. A good tip: save intermediate stages.

If a school had a 3D printer, many would probably rush to print right away, which is good in itself. After a while we would probably learn that not everything can be printed, which would let us take a step back and think about why.

What to print could emerge through discussion. By then the first experiments will perhaps have been made, and it will be clearer what is worth printing and what is not.

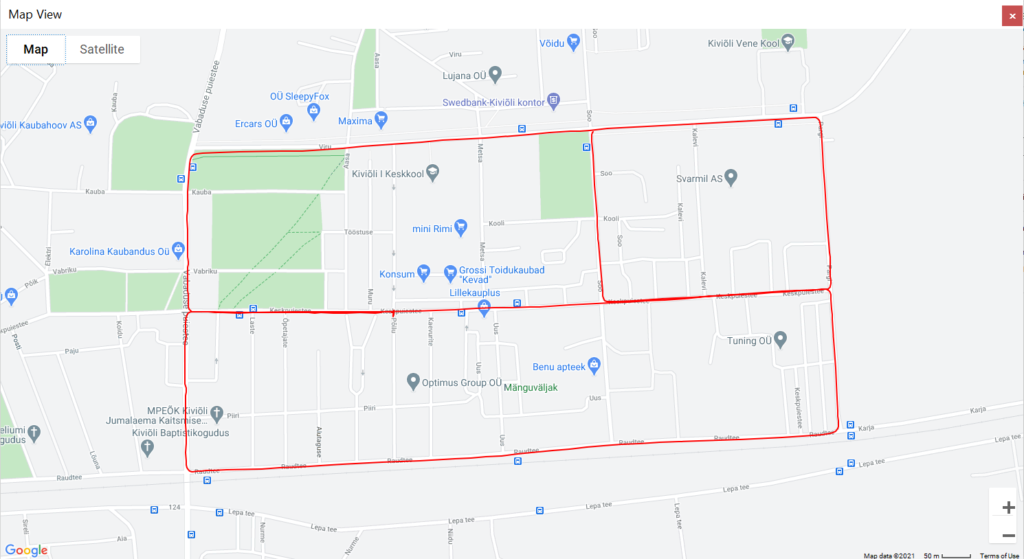

GPS art

The task was to create GPS art. It is certainly a commendable skill for motivating children to move outdoors; we all know what Pokémon GO managed to do.

Since the coronavirus has driven me to my summer house, there were not many nice streets to create something from. The summer house is near Kiviõli. I looked at the map in Google Maps, and somehow a graphical representation of the difference-of-squares formula seemed to be looking back at me.

To record the track, I used FlySight, a device I use in skydiving.